Development aid is changing rapidly. Development evaluation needs to change with it. Why? And how?

PLANNING IN A COMPLEX, DYNAMIC ENVIRONMENT REQUIRES MORE AND DIFFERENT EVALUATIONS

Linear, mechanistic planning for development is increasingly seen as problematic. Traditional feedback loops that diligently check whether an intervention is ‘on track’ to achieve a pre-defined milestone do not work with flexible planning. In their typical form (quarterly and annual monitoring, mid-term reviews, final evaluations, annual reporting, etc.), they are also too slow to influence decisions in time.

A new generation of evaluations is needed: one that better reflects the unpredictability and complexity of interactions typically found in systems, one that places renewed emphasis on innovation, with prototypes and pilots that can be scaled up, and one that can cope with a highly dynamic environment for development interventions.

Indeed, this is an exciting opportunity for monitoring and evaluation to reinvent itself: As linear, rigid planning is increasingly replaced by more flexible planning approaches that can address complex systems, we now need more responsive, more frequent and ‘lighter’ evaluations that can capture, and adapt to, rapidly and continuously changing circumstances and cultural dynamics.

We need two things. Firstly, we need up-to-the-minute ‘real-time’ or continuous updates at the outcome level; this can be achieved by using, for example, mobile data collection, intelligent infrastructure, or participatory statistics that can ‘fill in’ the time gaps between official statistical data collections. Secondly, we need broader methods that can record results outside a rigid logical framework; one way to do this is ‘outcome harvesting’, an approach that retrospectively collects evidence of what has been achieved and then works backward to determine whether and how the intervention contributed to the change.

MULTI-LEVEL MIXED METHODS BECOME THE NORM

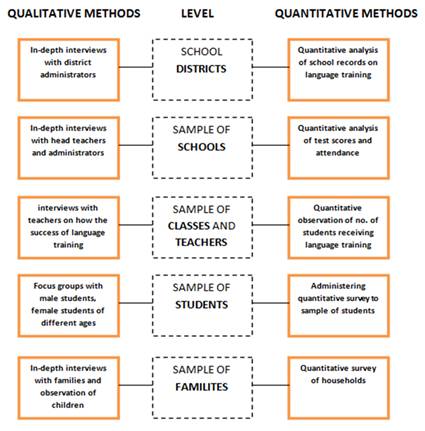

Although quantitative and qualitative methods are still regarded by some as two competing and incompatible options (like two-year-olds not yet able to play together, as Michael Quinn Patton put it in a recent blog post), there is a rapidly emerging consensus that an evaluation based on a single method is simply not good enough. For most development interventions, no single method can adequately describe and analyze the interactions found in complex systems.

Mixed methods allow for triangulation, or comparative analysis, which enables us to capture and cross-check complex realities and can give us a fuller understanding, from a range of perspectives, of the success (or lack of it) of policies, services or programmes.

It is likely that mixed methods will soon become the standard for most evaluations. But using mixed methods alone is not enough; they should be applied at multiple levels. What we need is for multi-level mixed methods to become the default approach in evaluation, and for the qualitative-quantitative debate to be declared over.

Source: adapted from Introduction to Mixed Methods in Impact Evaluation, Bamberger 2012, InterAction/The Rockefeller Foundation, Impact Evaluation Notes, No. 3, August 201

OUTCOMES COUNT

Whatever one might think about the merits or fallacies of results-based management, development evaluations have to deal with one of its consequences: a broad agreement that what ultimately counts, and should therefore be closely monitored and evaluated, are outcomes and impact. That is to say, what matters is not so much how something is done (i.e., inputs, activities and outputs), but what happens as a result. And since impact is hard to assess if we know little about outcomes, monitoring and evaluating outcomes becomes key.

There is one problem, however: By their nature, outcomes can be difficult to monitor and evaluate. Typically, data on behaviour or performance change is not readily available. This means that we have to collect primary data.

The task of collecting more and better outcome level primary data requires us to be more creative, or even to modify and expand our set of data collection tools.

Indeed, significant primary data collection will often become an integral part of evaluation. It will no longer be sufficient to rely on the staples of minimalistic mainstream evaluations: the non-random ‘semi-structured interviews with key stakeholders’, the unspecified ‘focus groups’, and so on. Major primary data collection will need to be carried out before or as part of the evaluation process. This will also require more credible and more outcome-focused monitoring systems.

Thankfully, many tools are becoming available to us as technology develops and spreads: Small, nimble random sample surveys such as Lot Quality Assurance Sampling (LQAS) are already in more frequent use. Crowdsourced information gathering and the use of micro-narratives can enable us to collect data that might otherwise be unobtainable through a conventional evaluation or monitoring activity. Another option is the use of ‘data exhaust’: passively collected data from people’s use of digital services such as mobile phones and web content, including news media and social media interactions.
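To illustrate why LQAS surveys can be so small, here is a minimal Python sketch of how an LQAS decision rule can be derived from the binomial distribution. It is not taken from the source: the helper names (`binom_cdf`, `lqas_decision_rule`) are hypothetical, and the parameters (a sample of 19, an upper coverage threshold of 80%, a lower threshold of 50%, and at most 10% misclassification risk in each direction) are illustrative assumptions.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p); returns 0 for k < 0."""
    if k < 0:
        return 0.0
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(min(k, n) + 1))


def lqas_decision_rule(n, p_upper, p_lower, max_alpha=0.10, max_beta=0.10):
    """Hypothetical helper: find the smallest decision rule d such that an area
    is classified as 'reaching the target' when at least d of n sampled units
    show the outcome, with both misclassification risks within the limits.

    Returns (d, alpha, beta), or None if no d satisfies both limits.
    """
    for d in range(n + 1):
        # alpha: risk of classifying an area that truly meets p_upper as failing
        alpha = binom_cdf(d - 1, n, p_upper)
        # beta: risk of classifying an area that is truly at p_lower as passing
        beta = 1.0 - binom_cdf(d - 1, n, p_lower)
        if alpha <= max_alpha and beta <= max_beta:
            return d, alpha, beta
    return None


if __name__ == "__main__":
    # Illustrative parameters (assumptions, not from the source)
    result = lqas_decision_rule(n=19, p_upper=0.80, p_lower=0.50)
    if result is not None:
        d, alpha, beta = result
        print(f"decision rule d = {d}, alpha = {alpha:.3f}, beta = {beta:.3f}")
```

Under these assumptions the sketch returns a decision rule of 13: if at least 13 of the 19 sampled respondents show the outcome, the area is classified as reaching the target. Raising d lowers the risk of wrongly accepting a poorly performing area but raises the risk of wrongly rejecting a good one, which is why the loop stops at the first d that keeps both risks within the chosen limits.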

So there is good reason for optimism. The day will soon come when it is standard practice for all evaluations to use mixed methods at multiple levels, with improved primary data collection enabling us to evaluate what really counts in our interventions: the outcomes and the impact.

Table:

Source: Discussion Paper: Innovations in Monitoring & Evaluating Results, UNDP, 05/11/2013.

Note: This blog post was originally published in 2014 as a guest post on BetterEvaluation.